Convolve

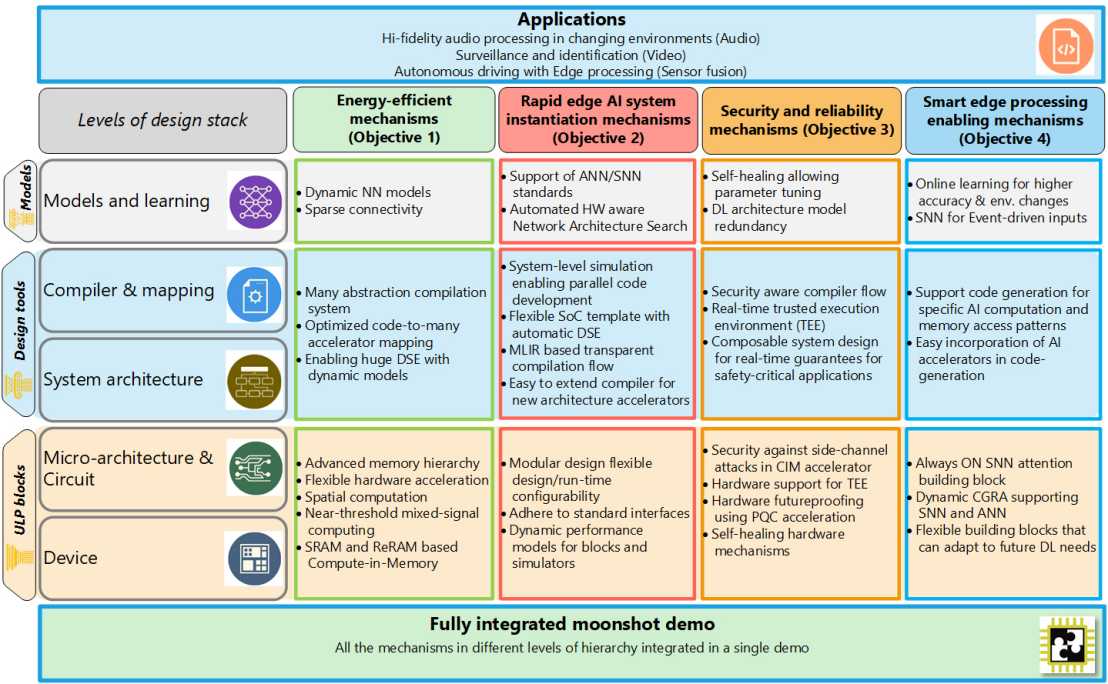

CONVOLVE reinforces the EU’s position in the design and development of smart edge processors, so that it can become a dominant player in the global edge-processing market. This requires a holistic approach that addresses the whole design stack. Such a complex approach carries risk; however, the risk is balanced by the high gains associated with mastering and integrating innovations at all levels. To enable this, CONVOLVE defines the following detailed SMART objectives and ensures that they are consistent with the expected outcomes and impact.

Objective 1

Achieve a 100x improvement in energy efficiency compared to state-of-the-art commercial off-the-shelf (COTS) solutions by developing near-threshold, self-healing, dynamically reconfigurable accelerators. This involves developing an ultra-low-power (ULP) library of novel architectural and micro-architectural accelerator building blocks (ULP blocks for short) that have common or standard interfaces and are optimized at the micro-architecture, circuit and device levels. Different architectural paradigms will be evaluated, such as Compute-in-Memory (CIM), Compute-Near-Memory (CNM), and Coarse-Grained Reconfigurable Arrays (CGRA), all of which keep processing very close to the memory to reduce energy consumption. The accelerator blocks are optimized to execute the computation patterns of both Artificial Neural Networks (ANN) and Spiking Neural Networks (SNN) efficiently. To further reduce energy consumption, the ULP blocks will support application dynamism: dynamically adapting computational precision and data-path width, terminating early, skipping layers or neurons, and so on. Leakage will be reduced by advanced power management and by using non-volatile ReRAM-based crossbar units. Novel self-healing mechanisms will be introduced to deal with hardware variability in the near-threshold region.
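As a rough illustration of what adapting computational precision means, the sketch below computes the same dot product at two bit widths using symmetric uniform quantization. The function names, the quantization scheme and the example vectors are assumptions made for illustration only; they are not taken from the CONVOLVE design.

```python
def quantize(values, bits):
    """Symmetric uniform quantization to signed `bits`-bit integers.

    Hypothetical helper: returns the integer codes and the scale factor
    needed to map them back to real values. Requires bits >= 2.
    """
    qmax = 2 ** (bits - 1) - 1
    # Fall back to a scale of 1.0 when every value is zero.
    scale = max(abs(v) for v in values) / qmax or 1.0
    return [round(v / scale) for v in values], scale


def dot_at_precision(weights, inputs, bits):
    """Dot product computed on quantized integers, then rescaled."""
    qw, sw = quantize(weights, bits)
    qx, sx = quantize(inputs, bits)
    return sum(a * b for a, b in zip(qw, qx)) * sw * sx


# Illustrative data only.
weights = [0.9, -0.4, 0.25, 0.1]
inputs = [0.5, 0.8, -0.3, 1.0]
exact = sum(w * x for w, x in zip(weights, inputs))

for bits in (2, 8):
    approx = dot_at_precision(weights, inputs, bits)
    print(f"{bits}-bit result: {approx:.4f} (error {abs(approx - exact):.4f})")
```

A block that can switch between such bit widths at run time could spend the wider, more expensive arithmetic only where the application needs the accuracy.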

Objective 2

Reduce design time by 10x, so that a ULP edge AI processor combining innovations from the different levels of the stack can be implemented quickly for a given application using a compositional ULP design approach. CONVOLVE researches efficient design-space exploration (DSE) techniques that combine different levels of the hierarchy in a compositional way, i.e., hardware and software components can be seamlessly glued together while overall behaviour and reliability are guaranteed. This deals with SoC heterogeneity and supports efficient mapping of applications to hardware architectures. Designing ULP accelerator blocks with common interfaces will allow them to be plugged into a modular architecture template. We will then generate an SoC architecture from these modular architecture templates after performing automated DSE, which allows all architecture possibilities to be evaluated.
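The idea of plugging interchangeable blocks into a template and exploring the resulting design space can be sketched as follows. This is a minimal sketch under stated assumptions: the `Block` type, the two template slots, the additive cost model and every number are hypothetical and serve only to show exhaustive enumeration followed by Pareto filtering.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Block:
    """Hypothetical block description; all figures are invented."""
    name: str
    energy_fj_per_op: int   # energy per operation, femtojoules
    area_kum2: int          # area, thousands of square micrometres


# Hypothetical candidates for each slot of the architecture template.
SLOTS = {
    "accelerator": [Block("cim", 500, 2000), Block("cnm", 800, 2200),
                    Block("cgra", 1200, 1000)],
    "memory": [Block("sram", 300, 1000), Block("reram", 100, 1400)],
}


def enumerate_designs(slots):
    """Yield every combination of one block per template slot."""
    names = list(slots)
    for combo in product(*(slots[n] for n in names)):
        yield dict(zip(names, combo))


def cost(design):
    """Assumed additive cost model: (total energy, total area)."""
    energy = sum(b.energy_fj_per_op for b in design.values())
    area = sum(b.area_kum2 for b in design.values())
    return energy, area


def pareto_front(designs):
    """Keep designs that no other design beats on both energy and area."""
    scored = [(cost(d), d) for d in designs]
    front = []
    for c, d in scored:
        dominated = any(o[0] <= c[0] and o[1] <= c[1] and o != c
                        for o, _ in scored)
        if not dominated:
            front.append((c, d))
    return front


for (energy, area), design in pareto_front(enumerate_designs(SLOTS)):
    picks = "+".join(b.name for b in design.values())
    print(f"{picks}: {energy} fJ/op, {area}k um^2")
```

Exhaustive enumeration is only feasible for small spaces like this one; a real flow would need a far richer cost model and smarter search strategies.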

Objective 3

Provide hardware security against known attacks, together with real-time guarantees, through compositional Post-Quantum Cryptography (PQC) and a real-time Trusted Execution Environment (TEE). We will design PQC accelerator blocks with standard interfaces that can be plugged into a modular architecture template to make the hardware secure, even in the long term (over a decade). Furthermore, CONVOLVE develops design-for-security schemes and makes sure that all security features can be added in a compositional manner while providing real-time guarantees. We will also explore design for robustness, to deal with in-field failures and non-ideal real-world environments.

Objective 4

Smart edge applications: CONVOLVE will develop smarter AI models to be combined with ULP accelerators. The project will explore AI models that dynamically adapt to the input data, such that the ‘common input case’ can be executed much more efficiently. This will dramatically reduce energy consumption. Furthermore, inspired by the redundancy and self-healing properties of biological brains, we enhance reliability through on-line (re-)learning, adapting parameters and weights on the fly. This requires new, low-cost learning algorithms. Finally, CONVOLVE investigates whether spiking neural networks (SNNs) could have an edge over ANNs in certain application domains, especially for streaming input and always-on attention blocks.
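One common way to make the frequent, easy inputs cheap is a confidence-gated cascade: a small model answers when it is confident, and a larger model runs only otherwise. The sketch below illustrates that general idea; it is not the project's method, and the toy models, the `cascade_predict` name and the 0.9 threshold are assumptions chosen for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cascade_predict(x, cheap_model, full_model, threshold=0.9):
    """Return (label, used_full_model).

    The cheap model handles inputs it is confident about; everything
    else falls through to the full model.
    """
    probs = softmax(cheap_model(x))
    confidence = max(probs)
    if confidence >= threshold:
        return probs.index(confidence), False
    probs = softmax(full_model(x))
    return probs.index(max(probs)), True


# Toy stand-ins for a small and a large network (hypothetical).
def cheap_model(x):
    return [4.0 * x, 0.0]


def full_model(x):
    return [10.0 * (x - 0.2), 0.0]


for sample in (1.0, 0.1):
    label, escalated = cascade_predict(sample, cheap_model, full_model)
    print(f"input {sample}: label {label}, full model used: {escalated}")
```

The energy saving depends on how often the cheap path is taken, so the threshold trades accuracy against average cost per inference.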