BRAINSEE

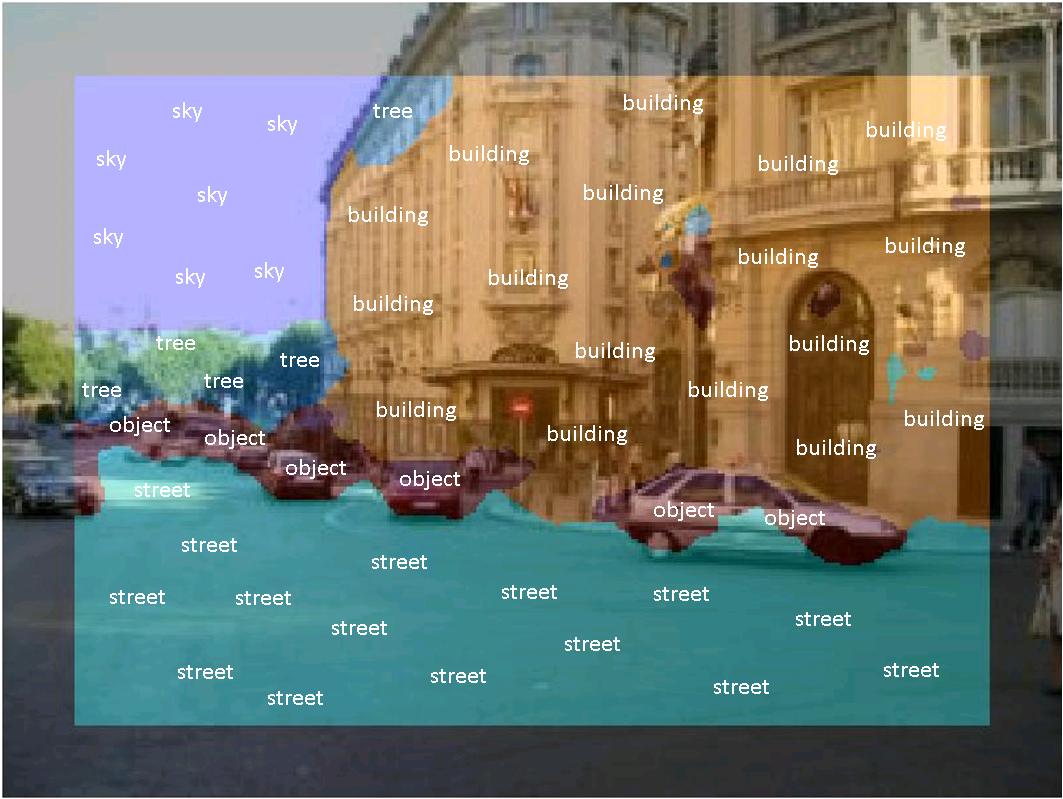

One of the major challenges of computer vision is to devise computationally affordable methods that automatically extract information in an integrated fashion. The Brainsee project focuses on exploring the potential of the recent wave of "brain inspired" computer vision approaches based on deep convolutional networks in applications requiring an embedded implementation. The final goal is to provide a flexible and scalable solution for distributed visual intelligence, where a significant part of the computer vision pipelines is offloaded to smart cameras deployed on the field. In this project, we investigate options to provide local visual intelligence by processing the data directly at the camera. We try to avoid postponing this analysis effort to a data center or a human operator, when it can be solved right at the source, also getting rid of the need for complex and expensive high-bandwidth networks. We are building a demonstrator of such an embedded solution which can be integrated into the camera, creating a "smart camera" able to identify and segment persons, cars, etc. in the scene.

We use brain-inspired deep learning to approach the algorithmic part of the task, which has been able to revolutionize the field of computer vision over the last few years. We have shown that the most promising deep learning approach, convolutional neural networks (ConvNets), can be very successfully applied to this task, achieving state-of-the-art results beyond existing solutions. ConvNets come with a huge computational burden, far beyond what is typically encountered in embedded systems. We look into developing optimized implementations, creating own hardware architectures, and jointly optimizing algorithms and implementation for a specialized processing system to be able to push the limits of intelligent surveillance systems on embedded platforms. Such optimizations involve the exploitation of the spatio-temporal locality and static setting found in surveillance scenarios and the inclusion of low-precision arithmetic with negligible accuracy loss and a signifcant reduction of the computational burden. The conceptually new ConvNets cannot only be used for object detection and classi cation or semantic segmentation, but they are able to solve also different computer vision tasks and are able to handle various data sources which can typically not easily be processed, such as multi-spectral imaging data. We also investigate these topics to see how far ConvNets can go to address complex computer vision tasks making use of the optimized soft- and hardware implementations we develop.

Links:

Selected student projects completed as part of this project:

- http://iis-projects.ee.ethz.ch/index.php/Improving_Scene_Labeling_with_Hyperspectral_Data

- http://iis-projects.ee.ethz.ch/index.php/High-speed_Scene_Labeling_on_FPGA

- http://iis-projects.ee.ethz.ch/index.php/FFT-based_Convolutional_Network_Accelerator